
AI future: Nvidia boffin hopes ‘everything that moves will eventually be autonomous’

Video The GenAI Summit 2024 opened at the Palace of Fine Arts in San Francisco, California, on Wednesday, and the people, who came to hear about artificial intelligence, had made a mess of things.

Around 0900, as things were getting underway, a large crowd of attendees waited outside the venue in disorganized lines, held up as staff scrambled to find badges. This reporter was admitted after mentioning his media affiliation, without any verification challenge or scrutiny of identification. Everyone just wanted to get on with the show. Inside, admission to the VIP-only AGI keynote in the theater was similarly lax.

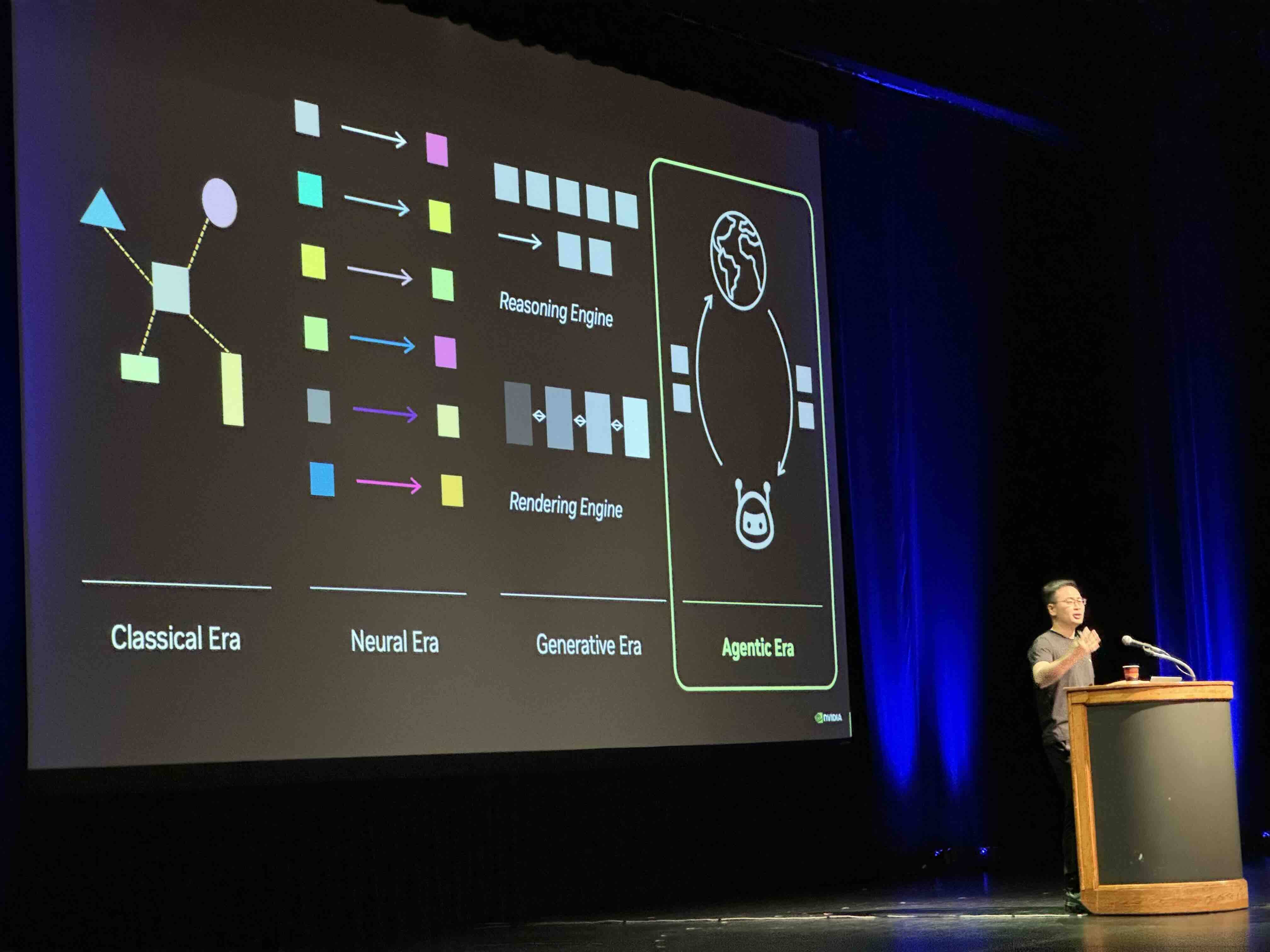

Jim Fan, senior research scientist at Nvidia and lead of its AI Agents Initiative, opened the show by revisiting the history of artificial intelligence, starting with Claude Shannon’s chess machine Endgame. He cited various milestones along the road to the “agentic era.”

To begin at the end of Fan’s presentation: the agentic era is where AI tech is headed, toward software agents that orchestrate how foundation models interact with other models and systems.

The “agentic” era, Fan contends, is the next technological step after the “generative,” “neural,” and “classical” eras of AI.

“I believe in a future where everything that moves will eventually be autonomous,” said Fan, without reflecting upon the potential implications.

Fan is trying to realize that vision through his work at Nvidia’s GEAR Lab, where GEAR stands for Generalist Embodied Agent Research.

A generalist agent, Fan explained, needs to be able to survive, navigate, and explore an open-ended world. It needs to have vast knowledge of that world. And it should be able to do pretty much any task.

“First, the environment needs to be open-ended enough because the agent’s capability will ultimately be upper-bounded by the environment complexity,” said Fan. “And the planet Earth we live on is a perfect example, because Earth is so complex that it allows an algorithm called natural evolution over billions of years to create all the humans in this room.”

Massive amounts of data are also required, said Fan, “because it’s not possible to explore from scratch. You need some common sense to bootstrap the learning.”

Also, he said, you need a foundation model powerful enough to learn from all these sources. “And this train of thought lands us in Minecraft,” said Fan.

Through Minecraft and related projects like MineDojo, which consists of a simulator, database, and agent; Voyager, a lifelong learning agent for Minecraft; Eureka, an agent for training robots; MetaMorph; and Isaac Sim, Fan believes technologists will be able to train foundation agents to the point that they can perform a vast array of useful tasks.

Minecraft can be used as a simulator to teach agents how to perform specific tasks. And with Isaac Sim, that training can be done incredibly quickly.

“Isaac Sim’s greatest strength is to run physics simulation at a thousand times or more faster than real-time,” said Fan.
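As a rough back-of-the-envelope check on that figure, the sketch below converts simulated experience into wall-clock time. Only the thousand-fold speedup comes from Fan's quote; the function name, the 365.25-day year, and the assumption of a single simulation running with no parallelism or overhead are illustrative.

```python
# Back-of-the-envelope: wall-clock time needed to simulate some amount of experience.
# The default speedup of 1000 is the "thousand times" figure from Fan's quote.
# The function name and the 365.25-day year are illustrative assumptions.

HOURS_PER_YEAR = 365.25 * 24  # 8766 hours in an average year


def wall_clock_hours(simulated_years: float, speedup: float = 1000.0) -> float:
    """Real hours needed to simulate `simulated_years` at `speedup` times real time."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return simulated_years * HOURS_PER_YEAR / speedup


if __name__ == "__main__":
    for years in (1, 10, 100):
        hours = wall_clock_hours(years)
        print(f"{years:>3} simulated year(s): {hours:.1f} hours, {hours / 24:.1f} days")
```

Under these assumptions, one simulated year takes a little under nine hours of real time, and ten simulated years take less than four days.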

In other words, the path to get from chatbots to robots that can do useful tasks in the real world gets much shorter with simulation tools that can cram years’ worth of training into days. In fact, for one demonstration, teaching a robot hand to spin a pen in its fingers, the software would outperform most human pen-spinners if the hardware were up to the task.

“There’s actually no real five finger hardware hack in the world that can have so much force and agility to spin a pen,” said Fan. “So we’re still waiting for hardware providers to catch up with Eureka.”

But for some applications, like teaching a robot dog to walk and maintain its balance atop a deformable yoga ball, foundation agents look promising.

“I believe training foundation agents will be very similar to ChatGPT,” said Fan. “All language tasks can be expressed as text in and text out. And ChatGPT simply trains it by scaling it up across lots and lots of text. And very similar here, the foundation agent takes as a prompt an embodiment specification and a language instruction and then it outputs actions.”

“The foundation agent is the next chapter for our GEAR Lab.”
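The interface Fan describes, with an embodiment specification and a language instruction going in and actions coming out, might be sketched roughly as follows. Every name here (`EmbodimentSpec`, `Action`, `FoundationAgent`, `ZeroAgent`) is hypothetical, as are the fields and the 12-joint quadruped; none of it reflects Nvidia's actual code, and the placeholder agent stands in for a trained model.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass(frozen=True)
class EmbodimentSpec:
    """Hypothetical description of a robot body."""
    name: str
    num_joints: int


@dataclass(frozen=True)
class Action:
    """Hypothetical action: one target value per joint."""
    joint_targets: List[float]


class FoundationAgent(Protocol):
    """Shape of the interface: (embodiment, instruction) in, actions out."""

    def act(self, embodiment: EmbodimentSpec, instruction: str) -> List[Action]:
        ...


class ZeroAgent:
    """Placeholder agent that ignores the instruction and emits one all-zero action."""

    def act(self, embodiment: EmbodimentSpec, instruction: str) -> List[Action]:
        return [Action(joint_targets=[0.0] * embodiment.num_joints)]


if __name__ == "__main__":
    agent: FoundationAgent = ZeroAgent()
    dog = EmbodimentSpec(name="quadruped", num_joints=12)  # joint count is an assumption
    print(agent.act(dog, "balance on the yoga ball"))
```

The point of the sketch is only the signature: the same `act` call would serve a robot hand or a robot dog, with the body described in the prompt rather than baked into the agent.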

The robots are coming. ®