Do you really need that GPU or NPU for your AI apps?

Kettle There’s no avoiding AI and LLMs this year. The technology is being stuffed into everything, from office software to phone apps.

Nvidia, Qualcomm, and others are happy to push the notion that this machine-learning work must be performed on an accelerator, be it a GPU or an NPU. Arm on Wednesday made the case that its CPU cores, used in smartphones and other devices throughout the world, are ideal for running AI software with no separate accelerator needed.

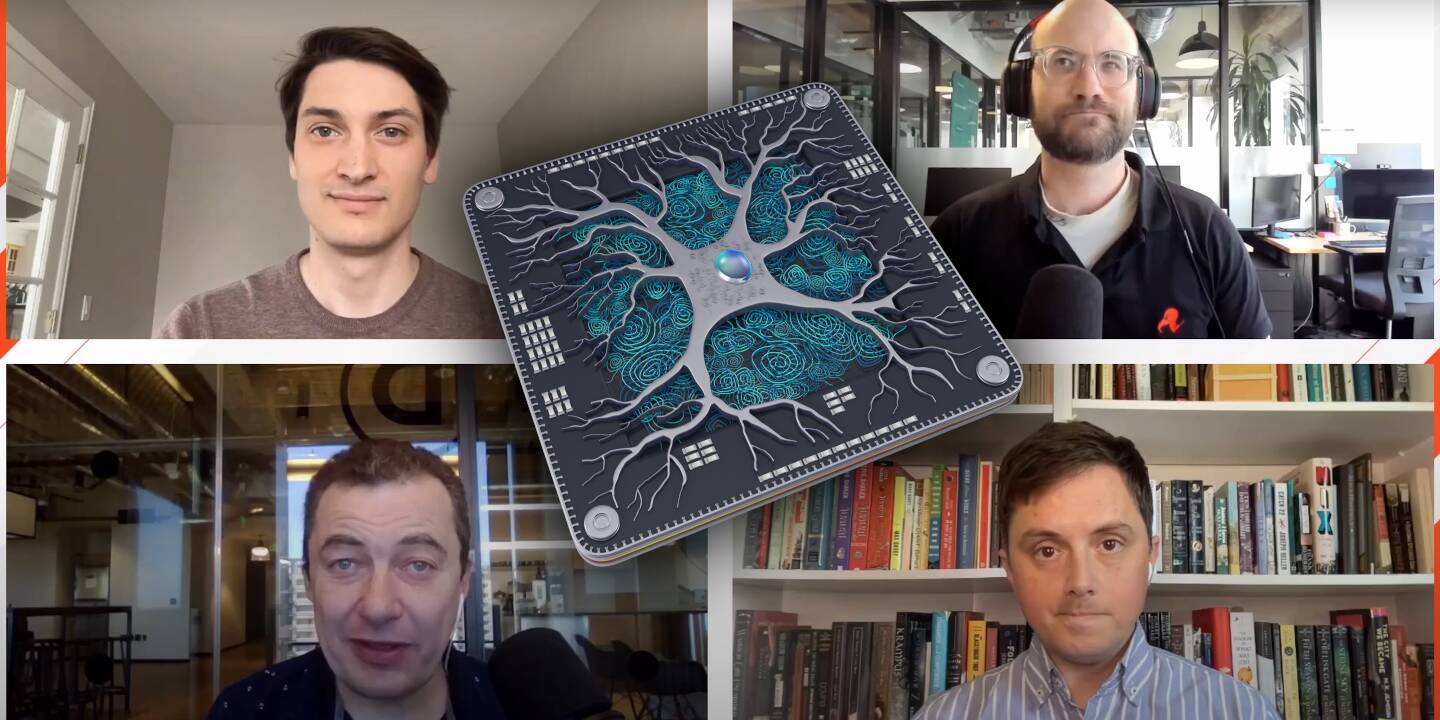

For this week’s Kettle episode, which you can replay below, our vultures discuss the merits of running AI workloads on CPUs, NPUs, and GPUs; the power and infrastructure needed to do so, from personal devices to massive datacenters; and how this artificial intelligence is being used, not least with Palantir’s AI targeting system being injected into the entire US military.

Joining us this week is your usual host Iain Thomson, plus Chris Williams, Tobias Mann, and Brandon Vigliarolo; our producer and editor is Nicole Hemsoth Prickett.

For those who yearn for just audio, our Kettle series is available as a podcast via RSS and MP3, Apple, Amazon, and Spotify. Let us know your thoughts, too, in the comments. ®